Work3 - The Future of Work

AI’s Next Leap: Human-Like Reasoning and Its Impact on Employment

Philosophy Meets Technology: Amazon's New Tool and the 4,500-Year Coding Shortcut

Source: CodeSignal

Hello and welcome to this week’s edition. Let’s dive in!

Will AI Make The Leap Towards Human-Like Reasoning?

Last week’s headline was hard to miss: OpenAI has launched its latest model, o1 (which I’ll call GPT o1 here), introducing 'chain-of-thought' or 'human-like' reasoning capabilities. Unlike its predecessors, GPT o1 takes longer to respond because it works methodically through multiple reasoning steps before arriving at an answer. Perhaps the OpenAI team took inspiration from my previous post on how AI can enhance thinking through the Socratic method? 😃

To visualize this paradigm shift, Jim Fan shared an insightful graphic in which a strawberry symbolizes GPT o1, a nod to the model's codename, 'Strawberry'.

Today's focus isn't a deep dive into GPT o1, but several questions about the widespread adoption of this approach have been on my mind:

Creativity and Innovation: How will reasoning AI reshape the boundaries of creativity and innovation in generative AI models?

User Experience: What changes will we see in the user experience when interacting with AI models that exhibit deep reasoning capabilities?

Long-Term Problem Solving: What new possibilities emerge when reasoning AI is given time to process complex problems over extended periods, such as days or weeks?

Resource Allocation: What will companies need to consider when balancing compute resources between training large reasoning models and running real-time inference?

Ethical Implications: How do reasoning models align with the broader, long-term vision of AI, and what new ethical questions arise from their capabilities?

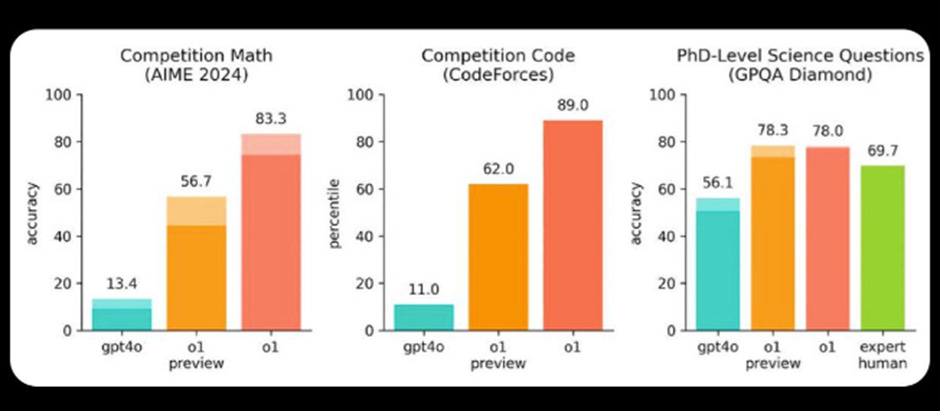

Another comparison chart shows GPT o1 outperforming human experts on PhD-level science questions. It prompted me to consider some key obstacles this technology might face when operating autonomously:

Dependability Over Time: While GPT o1 may excel initially, maintaining reliability over extended use could be challenging, potentially leading to errors and inefficiencies in long-term workflows. This might require more human oversight than anticipated. How do we plan for this?

Handling Complex and Evolving Tasks: Although GPT o1 is proficient in specific areas, it may struggle with more intricate and dynamic problems, limiting its effectiveness in environments that require adaptability. As we move forward, AI will need to handle ever more complex tasks, and that may not be solved by computing power alone.

Balancing Human Expertise and AI Assistance: Over-reliance on AI could erode human skills and expertise, which in turn leaves more room for errors. Who will check the output as the system increasingly becomes a black box? The problem is worse if the ‘inference’ stage can’t be analyzed.

Ongoing Maintenance and Fine-Tuning: To remain effective, models like GPT o1 need continuous retraining and updates. This requirement can escalate operational costs and complexity. Will the cost/opportunity balance always come out positive?

So how close is AI to the capability of human engineers?

A new study pits leading AI models against human engineers in real-world coding challenges. These challenges mirror the technical assessments used by major tech companies for hiring.

Key findings reveal:

Advanced AI models surpass the coding skills of average human engineers.

While top human coders still outperform the best AI models, the gap is narrowing.

AI models show dramatic improvement when given multiple attempts at a problem.
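To make the "multiple attempts" point concrete, here's a small Python sketch of pass@k, a metric widely used in code-generation benchmarks. It estimates the chance that at least one of k attempts solves a problem, given n sampled attempts of which c were correct. This is my own illustration, not the method used in either report linked below. The function names and the numbers (20 attempts, 4 correct) are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate P(at least one of k attempts is correct), given n sampled
    attempts of which c were correct (unbiased combinatorial estimator)."""
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    if n - c < k:
        # Every possible group of k attempts includes at least one correct one.
        return 1.0
    # 1 minus the probability that all k chosen attempts are incorrect.
    return 1.0 - comb(n - c, k) / comb(n, k)


def independent_success(p: float, k: int) -> float:
    """Simpler model: chance of at least one success in k independent
    tries when each try succeeds with probability p."""
    return 1.0 - (1.0 - p) ** k


if __name__ == "__main__":
    # Hypothetical benchmark run: 20 attempts sampled, 4 of them correct.
    for k in (1, 5, 10):
        print(f"pass@{k}: {pass_at_k(20, 4, k):.3f}")
```

With these made-up numbers, a model that gets only 1 in 5 attempts right looks far stronger once it is allowed 5 or 10 tries. That is one reason headline results depend heavily on how many attempts a benchmark permits.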

You can read the OpenAI report here.

And the AI Coding Benchmark with Human Comparison report here.

So, are companies actually saving money through AI-driven efficiencies?

Amazon Q Saves 4,500 Years of Coding

Amazon CEO Andy Jassy has talked about the impact of the company’s AI coding assistant, Amazon Q.

In his LinkedIn post, he says it helped the company upgrade its applications to newer Java versions, saving the equivalent of 4,500 developer-years of work. Separately, in a leaked internal chat, AWS CEO Matt Garman told employees that AI's growing role could fundamentally change what it means to be a software engineer. He suggested that as AI takes over more of the coding work, developers will need to shift their focus from writing code to understanding customer needs and designing the end product.

"It just means that each of us has to get more in tune with what our customers need and what the actual end thing is that we're going to try to go build," Garman said.

Amazon Q performing a code deployment

Techno-Magicians or Wild Extrapolation?

Of course, GPT o1 represents a significant advance in AI technology. However, our inherent tendency to think linearly rather than exponentially can skew how we apply it. We're often so captivated by the allure of techno-magic that we implement it without fully understanding its benefits, much as we did during the initial excitement around GPT-3. As Andy noted in our last article, “Is AI Truly Revolutionary?”, we are probably in the ‘Wild Extrapolation’ phase right now.

That's not to say there haven't been real benefits, but our attraction to shiny new tools can make us overestimate our own objectivity and rationality.

As Nassim Nicholas Taleb aptly puts it,

“what is rational is that which allows for survival… Anything that hinders one’s survival at an individual, collective, tribal, or general level is, to me, irrational.”

This perspective highlights that what we consider rational is often driven by survival instincts and economic imperatives, which leads to the continuous creation of new products, even ones we may not need. In our quest to stay ahead, we sometimes neglect to assess whether these innovations genuinely benefit us or whether we're merely chasing the next big thing. So while new technologies like GPT o1 hold great promise, it's essential to critically evaluate their long-term benefits and potential pitfalls instead of getting swept up in the hype.

That’s all for this week. Let me know in the comments if you have other interesting news or submissions you’d like to discuss!

Ciao,

Matteo